When you hear the word computer, you probably think of the powerful desktops, laptops, smartphones, and tablets that are common today. However, did you know that the history of computers and their predecessors extends back more than two thousand years? Prior to 1935, the term “computer” did not refer to a machine, but rather to a person who would “compute” math problems. After 1935, however, a computer came to mean a machine that translates input into output and can process and store information. How did this change come about?

Early Computers

The earliest ancestor of the modern computer is the abacus. It is believed to have been invented by the Chinese around the year 500 B.C.E., and the modern abacus became popular around 1300 C.E. The abacus was a predecessor to the calculator; it helped people solve mathematical problems more quickly than they could in their heads or by counting on their fingers. It could perform simple operations such as addition, subtraction, multiplication, and division, but it could also help with more complicated tasks such as finding square roots and working with fractions.

The abacus is a distant relative of the computer, but an invention from almost 200 years ago came much closer to the modern machine. This invention is the Difference Engine. The Difference Engine was designed in the 1820s by Charles Babbage, an English mathematician with a strong interest in astronomy. Astronomical work required numerous complicated calculations, and astronomers and navigators had to consult various tables and charts in order to complete them. The tables were computed by hand and often contained errors, which could mean death for sailors using them. Babbage had the ambitious idea of designing a machine to calculate the tables, thereby producing more accurate tables to use. The Difference Engine tabulated polynomial functions by the method of finite differences, which requires only repeated addition. Like modern computers, the Difference Engine was capable of storing information for a short period, holding intermediate values on its number wheels.
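The Difference Engine takes its name from the method of finite differences, which lets a table of polynomial values be extended using nothing but addition. The idea can be illustrated with a minimal Python sketch. This is not a model of Babbage's actual mechanism: the function names and the sample polynomial are assumptions chosen here purely for illustration.

```python
def initial_differences(values):
    """Return the first value and its successive finite differences.

    `values` must hold the first few table entries, computed by hand
    (one more entry than the degree of the polynomial).
    """
    column = []
    row = list(values)
    while row:
        column.append(row[0])
        # Each new row holds the differences between neighbouring entries.
        row = [b - a for a, b in zip(row, row[1:])]
    return column


def tabulate(column, count):
    """Produce `count` table entries using only addition."""
    registers = list(column)
    table = []
    for _ in range(count):
        table.append(registers[0])
        # Each register absorbs the one after it; the last stays constant.
        for i in range(len(registers) - 1):
            registers[i] += registers[i + 1]
    return table


def f(x):
    # Hypothetical sample polynomial, used only to seed and check the table.
    return x * x + x + 41


seed = initial_differences([f(x) for x in range(3)])
table = tabulate(seed, 8)
print(seed)
print(table)
```

Only the three seed values require evaluating the polynomial directly. Every later entry comes from additions alone, which is what made the approach suitable for a machine built from gears and wheels.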

Machines during World War II

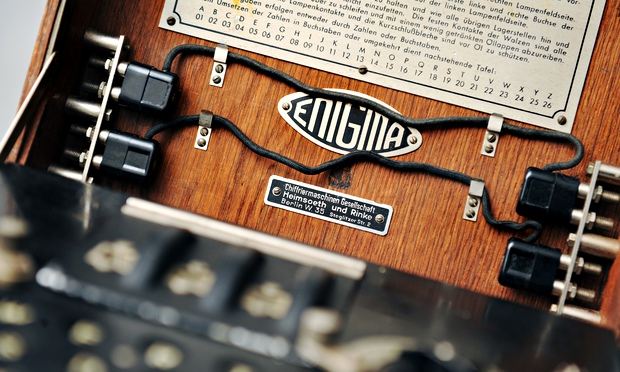

As the twentieth century progressed, more complex and powerful machines were developed. During the late 1930s and early 1940s, both the Axis and Allied powers of World War II used machines to encode and decode transmissions. The Germans used their Enigma machine to produce a code that could not be broken by hand. Luckily, Polish codebreakers devised a machine, called the Bombe, that was capable of cracking the daily German code in under two hours, and the Poles passed this information on to the British before the German invasion of Poland. The Germans later changed their code to make it more secure, and the Polish machine could no longer keep up. As a result, the British had to design a new machine. Alan Turing, a British mathematician and codebreaker, designed an improved Bombe that could break the strengthened German code faster than the Polish Bombe.

Development of Computers

Prior to 1950, the driving force behind the development of new and more powerful computers was military use. In addition to breaking the codes discussed above, machines were used to complete trajectory charts. Each of the new weapons produced during World War II needed charts detailing how to aim it in different weather conditions, and these charts took up to six months to complete by hand. With computers, the charts could be completed much faster. The driving force changed in the early 1950s, when the first computers designed for business uses such as accounting and banking were introduced. These computers were extremely large, heavy, and expensive; adjusted for inflation, they cost almost $4 million. Later in the same decade, the adoption of transistors in place of vacuum tubes allowed for much smaller and less power-hungry machines.

In the 1960s, computers became even smaller and less expensive than transistors alone had allowed, thanks to the microchip, whose invention is credited to Robert Noyce and Jack Kilby. This new technology dramatically decreased prices, allowing small businesses and even some individuals to purchase their own computers. Microchips led to the production of microprocessors, an important step towards the development of personal computers.

Because the microprocessor had not yet been invented, PCs did not become widespread until the 1970s. The Altair 8800 was one of the first popular personal computers, and soon other companies began entering the market. In 1977, several new PCs came out, including the Commodore PET, the TRS-80, and the Apple II. These three models were referred to collectively as the “Trinity,” and sold millions of units. The Apple II was the only one with a full color display, and it became the best-selling model in the Trinity.

The first portable computers hit the market in the 1980s. The first of these weighed 23.5 pounds and had only a 5-inch display. Obviously, these specifications were not ideal for a “portable” computer, and portables continued to evolve into the ultraportables we have today. The first portable computer with a flip-open screen, like today’s laptops, was produced in 1982, but the term “laptop” first became popular after it was used in marketing the Gavilan SC in 1983. After the release of the Commodore SX-64, the first color portable computer, in 1984, screen resolution and color vibrancy rapidly increased.

Modern Computers: Microsoft and Apple

In 2016, the two most popular types of computers are Microsoft’s Windows machines and Apple’s Macs. The two companies compete for business now, but over the years they have alternated between cooperation and competition. In the early 1980s, when the Macintosh was first in development, Microsoft was making so much software for Apple that Bill Gates claimed he had more people working on the Mac than Steve Jobs did. However, the relationship soured when Microsoft began working on an operating system to rival Apple’s. After Jobs was ousted from Apple, Microsoft dominated the computer industry while Apple became less and less relevant. When Apple brought Jobs back, however, he announced a new deal that kept Microsoft Office available for the Mac and ushered in a new period of cooperation between the two tech giants. As time passed, Apple slowly regained its footing in the market, and the personal relationship between Jobs and Gates deteriorated. Today, both Apple and Microsoft have new leaders, and with the personal rivalry removed, the two companies are once again starting to work together.

Computers have evolved from primitive calculating machines to complex devices capable of running multiple programs simultaneously. So too has the reason for developing computers changed. At the outset, computers were seen as a way to assist people in their work, or to do work for them. Once the first computers were developed, however, the focus changed. From the 1970s and 1980s through today, tech companies have tried to build computers that are faster and more powerful than the models currently available, while fitting them into thinner, smaller frames. This push for smaller yet more powerful technology is not only for consumer convenience. The true driving force is profit. As companies compete for business, one way to draw customers is to develop more desirable technology.
